Apple is dramatically improving Maps. Combined with new high-precision GPS and augmented reality, the company could change mobile navigation forever.

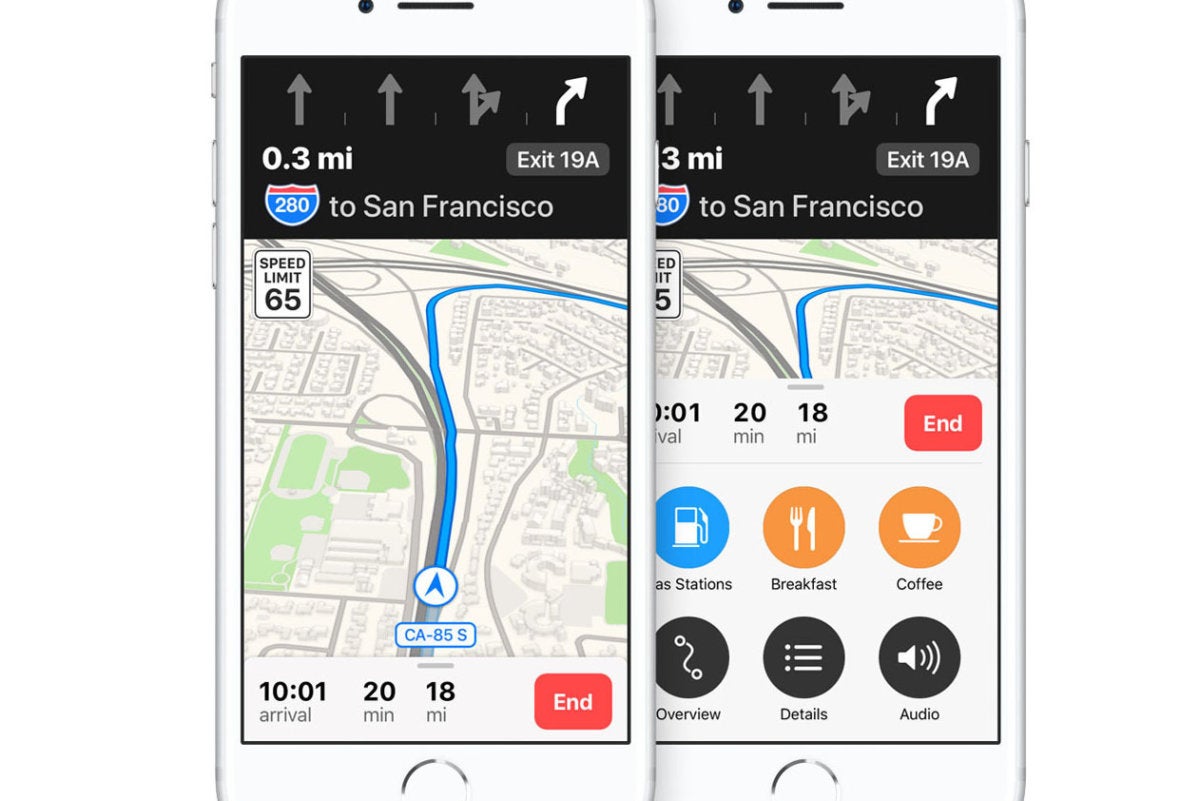

Apple is improving Maps in a big way. More precise and accurate data, better design and readability, faster data updates: it’s a huge project that should make Maps ten times better than it is today, and it will start rolling out this fall.

But the new maps are just the tip of the iceberg. Reading the tea leaves and projecting just a little bit into the future, it’s easy to imagine a mapping and location experience that makes today’s mobile mapping look like MapQuest circa 2002.

When we look at recent advancements in mapping data, GNSS (Global Navigation Satellite System) technology, and Augmented Reality, a picture starts to form of mobile navigation unlike anything we have today.

GPS supercharged

A key component of the future of navigation is ultra-precise GNSS (a catch-all term for satellite navigation systems like GPS, GLONASS, Galileo, and BeiDou). Today’s phones support multiple GNSS systems, but they’re only accurate to within 5 meters or so. If you’re walking down a city street, your phone may combine GNSS data with a catalog of known Wi-Fi hotspots and other markers to improve accuracy, but that only goes so far.

It’s not uncommon today for your phone to think you’re actually in the shop next door, or on the wrong side of your office floor. And forget about actually knowing which side of the street you’re on, or which lane you’re driving down on the highway.

Advanced GNSS chips offer far greater precision: we’re talking about one or two feet. Last year, Broadcom announced its BCM47755 chip, which uses less power than current GNSS chips and can receive two frequencies at the same time from each of GPS, Galileo (the European system), and QZSS (the Japanese system). By combining and comparing two frequencies at once, Broadcom says it can achieve “centimeter accuracy” in a device efficient enough even for fitness wearables.
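
Broadcom doesn’t spell out exactly how its chip uses the second frequency, but one well-known benefit of dual-frequency receivers is easy to show. The ionosphere delays a satellite signal by an amount that scales roughly as 1/f², so two range measurements of the same satellite on different frequencies can be combined to cancel that first-order error. The Python sketch below shows this textbook “ionosphere-free” combination, assuming GPS L1 (1575.42 MHz) and L5 (1176.45 MHz) measurements. It is an illustration of the general technique, not Broadcom’s firmware, and the distances and delay are made-up example values.

```python
# Carrier frequencies for GPS L1 and L5, in Hz.
F_L1 = 1575.42e6
F_L5 = 1176.45e6


def ionosphere_free(p1: float, p2: float, f1: float = F_L1, f2: float = F_L5) -> float:
    """Combine two pseudoranges (meters) measured on frequencies f1 and f2.

    First-order ionospheric delay scales as 1/f**2, so this weighted
    difference cancels it and leaves the geometric range plus any
    frequency-independent errors (clock offsets, troposphere, noise).
    """
    g1, g2 = f1 ** 2, f2 ** 2
    return (g1 * p1 - g2 * p2) / (g1 - g2)


def simulate_pseudorange(true_range: float, delay_l1: float, f: float) -> float:
    """Hypothetical helper: true range plus an ionospheric delay that is
    delay_l1 meters on L1 and scales as 1/f**2 on other frequencies."""
    return true_range + delay_l1 * (F_L1 / f) ** 2


if __name__ == "__main__":
    true_range = 20_200_000.0  # meters, roughly a GPS satellite's orbital altitude
    delay_l1 = 5.0             # meters of ionospheric delay on L1 (illustrative)
    p_l1 = simulate_pseudorange(true_range, delay_l1, F_L1)
    p_l5 = simulate_pseudorange(true_range, delay_l1, F_L5)
    print(f"L1 error: {p_l1 - true_range:.2f} m")
    print(f"L5 error: {p_l5 - true_range:.2f} m")
    print(f"Combined error: {ionosphere_free(p_l1, p_l5) - true_range:.6f} m")
```

Each frequency on its own is off by several meters, while the combined estimate recovers the true range up to floating-point rounding. Real receivers still have to deal with multipath, clock errors, and noise, which this sketch ignores.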

Of course, one can hardly expect real-world accuracy to match what is claimed in a press release, but even if it’s thirty times worse (thirty centimeters!), you’re looking at precise location to within about one foot.

Hey, you know who happens to use Broadcom GPS chips? Apple. Broadcom said the BCM47755 chip would start appearing in phones in 2018, but it has only shipped in one phone so far: the Xiaomi Mi 8. And it’s not entirely clear whether the firmware and software APIs in that phone are making this high-precision positioning data available to developers yet.

What if the GPS on your phone could reliably know which lane you’re in on the highway? Which aisle you’re walking down in the supermarket? The exact row in which you’re seated in a stadium?

Combined with super-precise maps, that kind of resolution changes everything.

Building Maps for the future

Apple is about to completely overhaul its mapping data. Beginning with the San Francisco Bay Area in the next iOS 12 beta, expanding to all of Northern California by the fall, and rolling out to other regions over the coming year, Apple Maps will start to use data from a years-long project to take complete control over the mapping and location data stack.

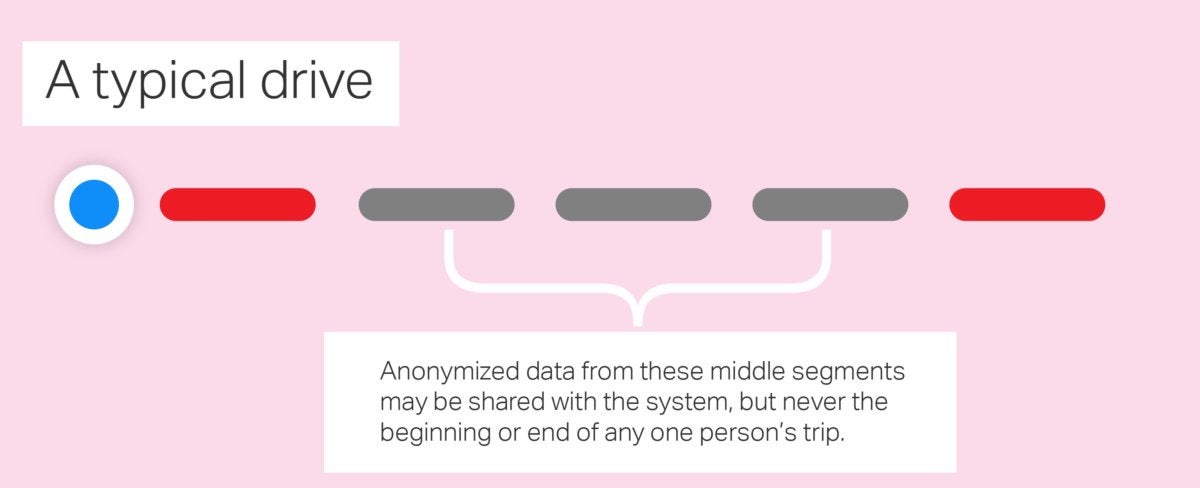

Until now, Apple Maps has relied on a giant mish-mash of third-party data, and sometimes it takes forever for changes or corrections to that data to be made by those companies. Starting this fall, Apple will replace that with its own data, gathered by fancy camera-and-LIDAR-equipped trucks, satellite imagery, and for the first time ever, safely anonymized data from millions of iPhone users.

It’s not just going to be vastly more accurate, more reliable, and more rapidly updated. It’s also going to be extremely precise. That will greatly improve the current Maps experience, but when combined with a hypothetical future iPhone that has location data accurate to less than one meter, it’s easy to see how Apple is building (and for the first time, fully controlling) a set of location data for the future, not just for the way we use our phones today.

Imagine driving down the highway and being told not just what your next turn is or which lane you need to be in, but getting individualized guidance based on knowing which lane you’re currently in. Maps’ driving directions could tell you to “move two lanes to the right.” Or, if you’re in the correct lane already, it could say nothing at all or just prompt you to “stay in this lane.” It could help you avoid slow traffic by knowing that, five miles ahead, the left lane is moving freely while the right two lanes are very slow, and you should move over now. With millions of iPhones anonymously feeding sub-meter location data to Apple’s servers, Maps could tell you not just where traffic is slow, but how it’s slow, lane by lane.
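
The lane-level guidance imagined here boils down to simple decision logic once the phone knows its current lane. The Python sketch below is a hypothetical illustration, not an Apple Maps API: it assumes lanes are numbered from the left starting at 0, that map data supplies the set of lanes leading to the next maneuver, and that the function name `lane_instruction` and its wording are made up for this example.

```python
from typing import Optional, Set

# Spelled-out counts for short spoken prompts; larger counts fall back to digits.
NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four"}


def lane_instruction(current_lane: int, target_lanes: Set[int]) -> Optional[str]:
    """Return a spoken-style lane prompt, or None when no prompt is needed.

    current_lane: lane the car is in, 0 = leftmost (assumed convention).
    target_lanes: lanes that lead to the next maneuver (assumed map data).
    """
    if not target_lanes:
        raise ValueError("target_lanes must not be empty")
    if current_lane in target_lanes:
        return None  # already in a correct lane: say nothing at all
    # Choose the closest acceptable lane to minimize lane changes;
    # on a tie, prefer the leftmost lane so the result is deterministic.
    nearest = min(target_lanes, key=lambda lane: (abs(lane - current_lane), lane))
    delta = nearest - current_lane
    direction = "right" if delta > 0 else "left"
    count = abs(delta)
    noun = "lane" if count == 1 else "lanes"
    word = NUMBER_WORDS.get(count, str(count))
    return f"Move {word} {noun} to the {direction}."
```

For example, `lane_instruction(0, {2})` asks the driver to move two lanes to the right, while `lane_instruction(1, {1, 2})` returns `None` because the car is already in a usable lane. Choosing among target lanes by live per-lane speed, as the paragraph suggests, would be a natural extension.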

Can’t find the sprinklers in your local Lowe’s? After a few frustrating minutes of looking around, pull out your phone and ask “Hey Siri, where are the sprinklers?” It knows not only what store you’re in, but has location data precise enough to see you’re walking down aisle three. It tells you to turn right, then go down four more aisles to aisle seven, where sprinklers will be on your right.

Imagine getting walking directions that tell you when you need to cross the street because your phone knows you’re on the opposite side from the store you’re looking for. It knows the entrance is around the corner and down the alley, and gives you step-by-step directions that guide you right to it.

You arrive at the football game and don’t know how to get to section C, row 37, seat 14. Fortunately, your iPhone already knows what seat you’re in (thanks to the ticketing app or email confirmation), and as soon as you arrive at the stadium, Siri asks if you want directions. With precise plans of arenas and event venues, combined with location accurate to within a couple of feet, your phone could give you step-by-step walking directions that take you to your exact seat. No need to stare at your phone while in a big crowd, either. Your Apple Watch will tap your wrist and give you directions each step of the way.

These scenarios don’t need to be years in the future. The fundamental technologies are either already here or imminent. With the right priorities and structures in place, Apple could give us all these capabilities and more when iOS 13 ships next year.

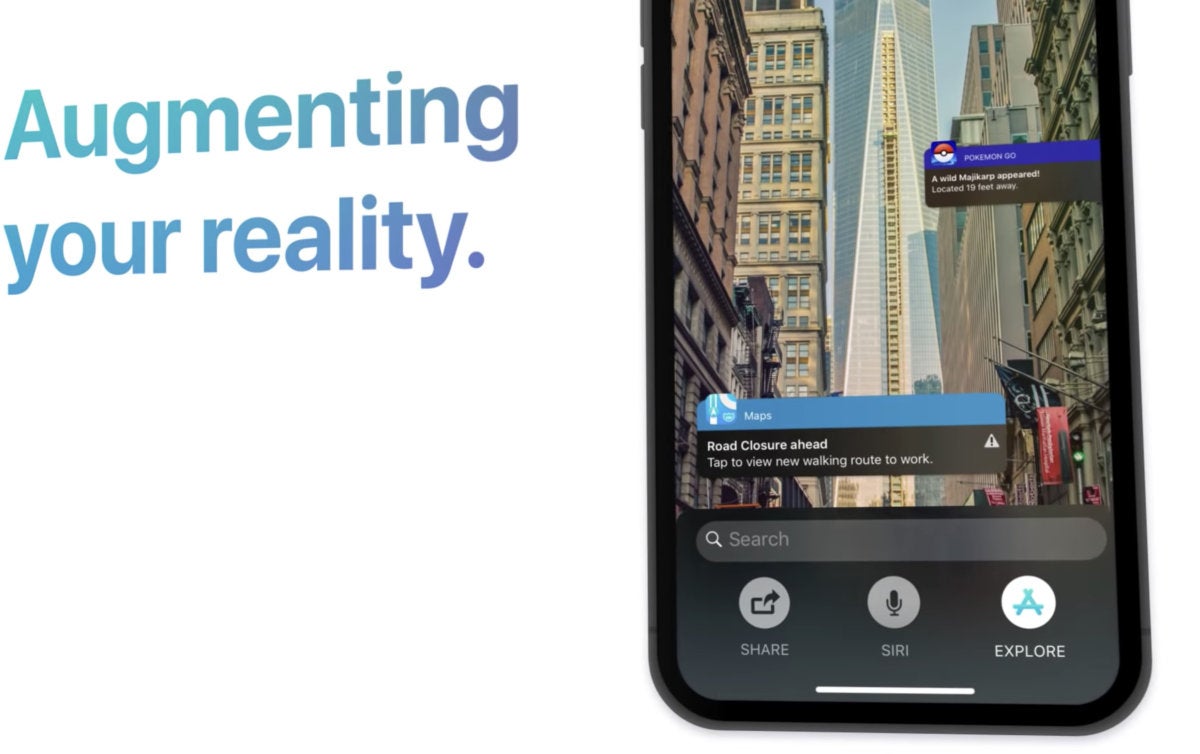

Just add Augmented Reality

As amazing as mapping and directions at sub-meter scale could be, it’s nothing compared to what you get when you layer Augmented Reality on top of it. Tim Cook has, on multiple occasions, said that AR is going to be “profound” and “change everything.” It’s not just about measuring objects or playing multiplayer games.

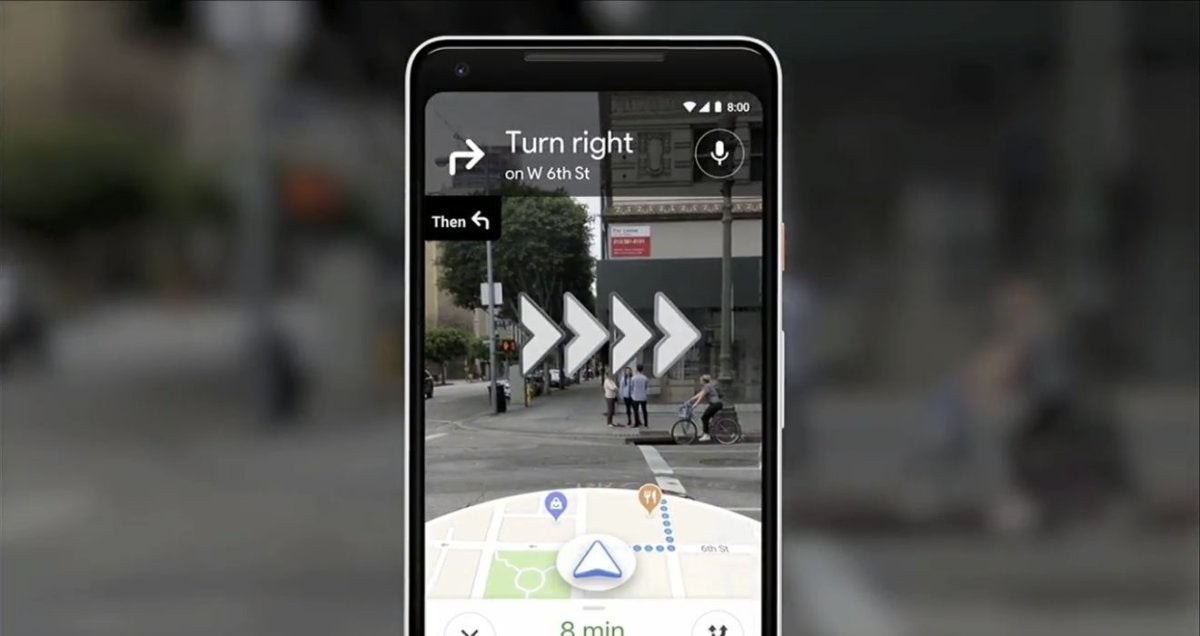

Take all the advanced mapping scenarios I mentioned before, and overlay directions, location icons, arrows, and more onto the real world. Pull up your phone and point it at the rows of seats in the football stadium and see a bright beacon of light shining down on section C, row 37, seat 14.

You ask your phone for recommendations of a good place to get lunch nearby, and when you hold up your phone, restaurant rating cards are superimposed over the real world, each one precisely located right where the restaurants are. Tap the one you want and a helpful guide arrow leads you around the corner, across the crosswalk, up the street, and then down the alley. It keeps you on the sidewalk and sounds an alert through your AirPods if you step off the curb onto the street because you weren’t paying attention. You never would have found Little Skillet without it!

Getting directions has long been heralded as an obvious use case for augmented reality (following directions is inherently easier when they’re superimposed onto the real world), but to make it truly work, you need precision that just isn’t available today. You need mapping data that knows exactly where things are, and you need to know where the user is to within a few feet.

Combine future iPhones that use Broadcom’s super-precise GNSS with ARKit and advanced new mapping data built from incredibly precise measurements, human editors, and information pulled from hundreds of millions of iPhones, and you’ve got a recipe for a truly next-generation mapping experience. Because Apple has such reach and controls both the hardware and software, maintains its own maps platform, has the most advanced phone-based AR platform, and is amassing super-precise location data, it is uniquely positioned to bring these experiences to the mass market before anyone else.
